|

Mengdi Li

I am currently a postdoctoral researcher at King Abdullah University of Science and Technology (KAUST) in Saudi Arabia. I completed my Ph.D. in 2024 in the Knowledge Technology group at the University of Hamburg in Germany, where I worked on reinforcement learning for embodied agents. My dissertation, "Active Vision for Embodied Agents Using Reinforcement Learning", is publicly available. I welcome anonymous feedback or suggestions regarding my work or myself.

Email / Google Scholar / Twitter / GitHub / LinkedIn |

|

Research

My research centers on data-driven decision-making: I work on advancing reinforcement learning (RL) algorithms and applying them to real-world challenges, including large language models (LLMs) and robotics. My recent work covers reward modeling, RL from AI feedback, and LLM reasoning. I am particularly interested in the learning paradigm in which policy models (trained through RL) and reward models (trained through supervised learning) are jointly optimized, with attention to both methodological advances and potential applications. I believe next-generation AI agents will require reward models with three essential characteristics:

|

News

|

Selected Publications (see all publications here)

In reverse chronological order / * equal contribution |

|

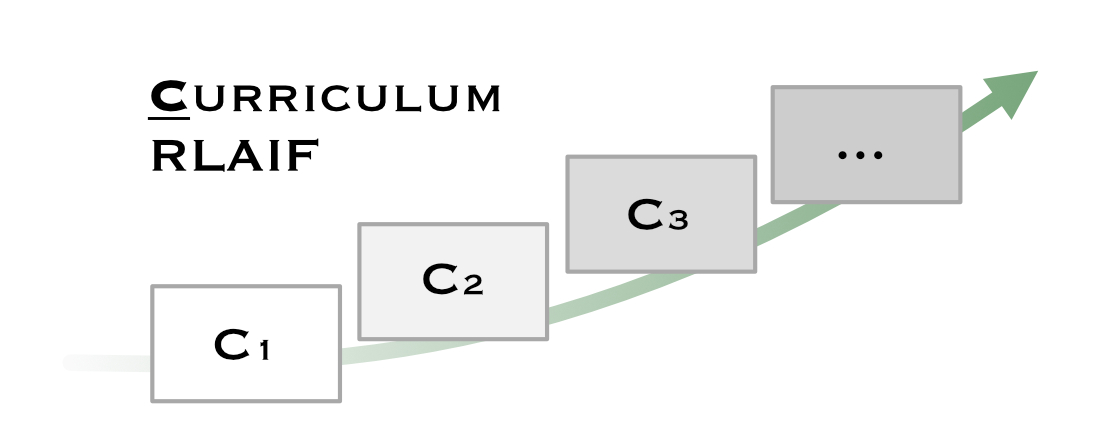

Curriculum-RLAIF: Curriculum Alignment with Reinforcement Learning from AI Feedback

Mengdi Li*, Jiaye Lin*, Xufeng Zhao, Wenhao Lu, Peilin Zhao, Stefan Wermter, Di Wang

arXiv, 2025

arXiv

To improve the generalizability of reward models within the RLAIF paradigm, we introduce a novel framework called Curriculum-RLAIF, which generates preference pairs of varying difficulty levels to facilitate reward model training. |

|

Enhancing Zero-Shot Chain-of-Thought Reasoning in Large Language Models through Logic

Xufeng Zhao, Mengdi Li, Wenhao Lu, Cornelius Weber, Jae Hee Lee, Stefan Wermter

LREC-COLING, 2024

project page / arXiv

To improve the zero-shot chain-of-thought reasoning ability of LLMs, we propose LoT (Logical Thoughts), a neurosymbolic framework that leverages principles from symbolic logic to verify the reasoning process and revise it accordingly. |

|

Chat with the Environment: Interactive Multimodal Perception using Large Language Models

Xufeng Zhao, Mengdi Li, Cornelius Weber, Burhan Hafez, Stefan Wermter

IROS, 2023

project page / code / video / arXiv / poster / slides

We develop an LLM-centered modular network to provide high-level planning and reasoning skills and control interactive robot behaviour in a multimodal environment. |

|

Internally Rewarded Reinforcement Learning

Mengdi Li*, Xufeng Zhao*, Jae Hee Lee, Cornelius Weber, Stefan Wermter

ICML, 2023

project page / code / arXiv / poster

We propose the clipped linear reward to stabilize reinforcement learning where reward signals for policy learning are generated by a discriminator-based reward model that is dependent on and jointly optimized with the policy. |

|

Robotic Occlusion Reasoning for Efficient Object Existence Prediction

Mengdi Li, Cornelius Weber, Matthias Kerzel, Jae Hee Lee, Zheni Zeng, Zhiyuan Liu, Stefan Wermter

IROS, 2021

code / video / arXiv

We propose an RNN-based model, jointly trained with supervised and reinforcement learning, that predicts the existence of objects in occlusion scenarios. |

|

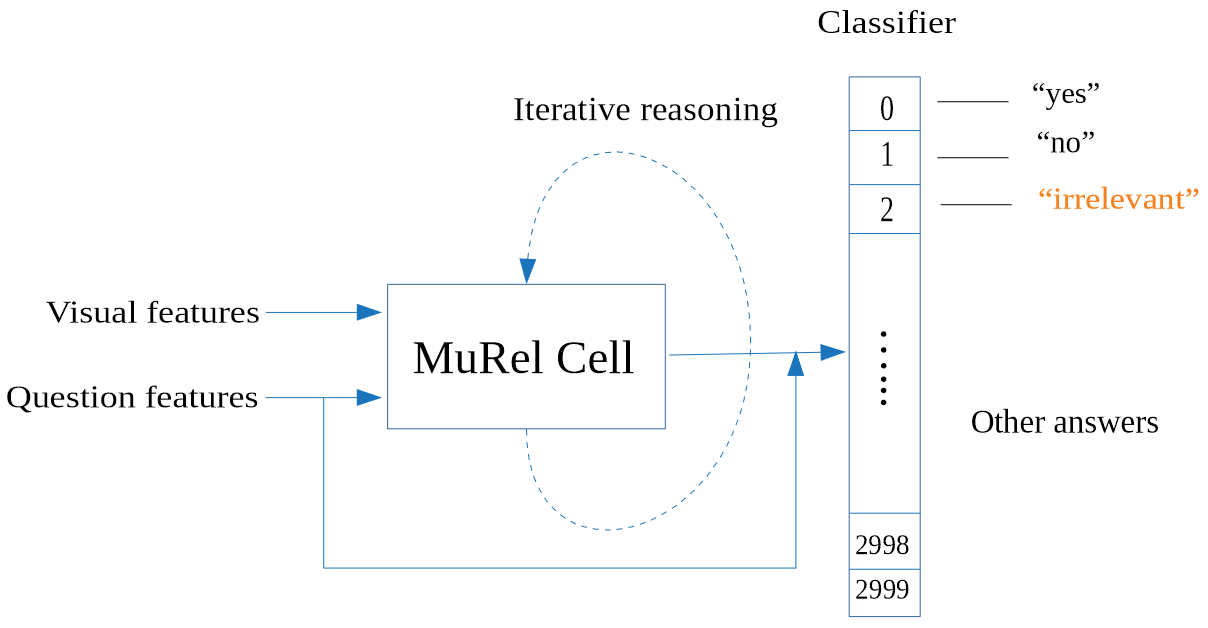

Neural Networks for Detecting Irrelevant Questions During Visual Question Answering

Mengdi Li, Cornelius Weber, Stefan Wermter

ICANN, 2020

paper

We demonstrate that an efficient neural network designed for VQA can achieve high accuracy in detecting the relevance of questions to images; however, jointly training the model on relevance detection and VQA degrades its VQA performance. |

|

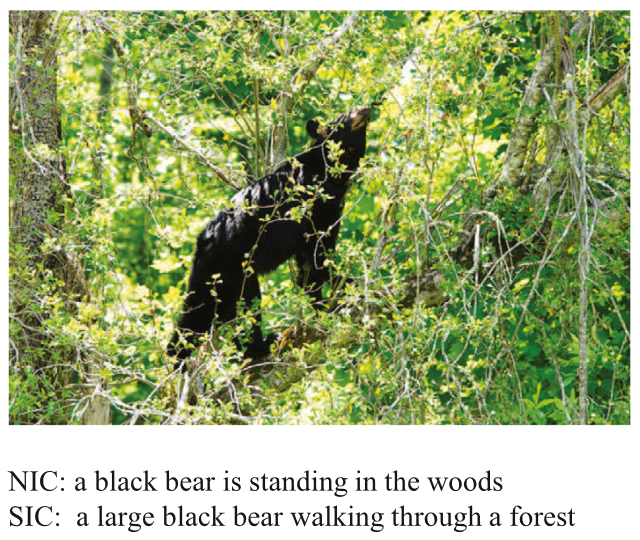

Generating Steganographic Image Description by Dynamic Synonym Substitution

Mengdi Li, Kai Mu, Ping Zhong, Juan Wen, Yiming Xue

Signal Processing, 2019

paper

We propose a novel image captioning model to automatically generate stego image descriptions. The proposed model is able to generate high-quality image descriptions in both human evaluation and statistical analysis. |

|

The template of this website is borrowed from Jon Barron. |